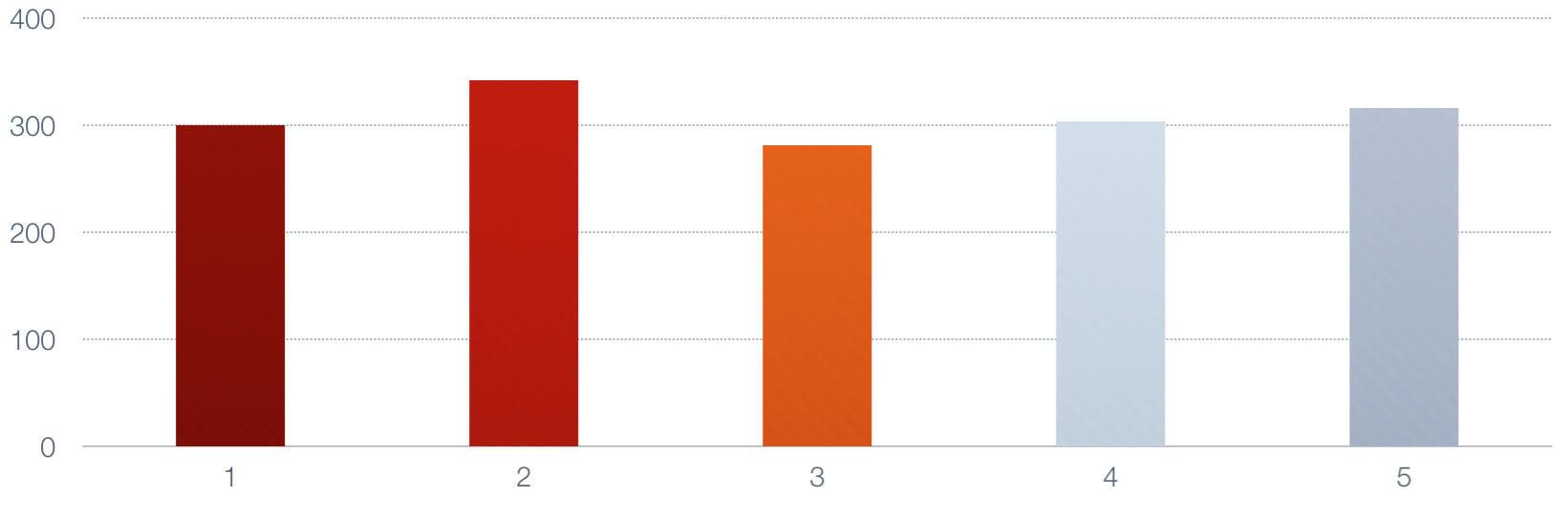

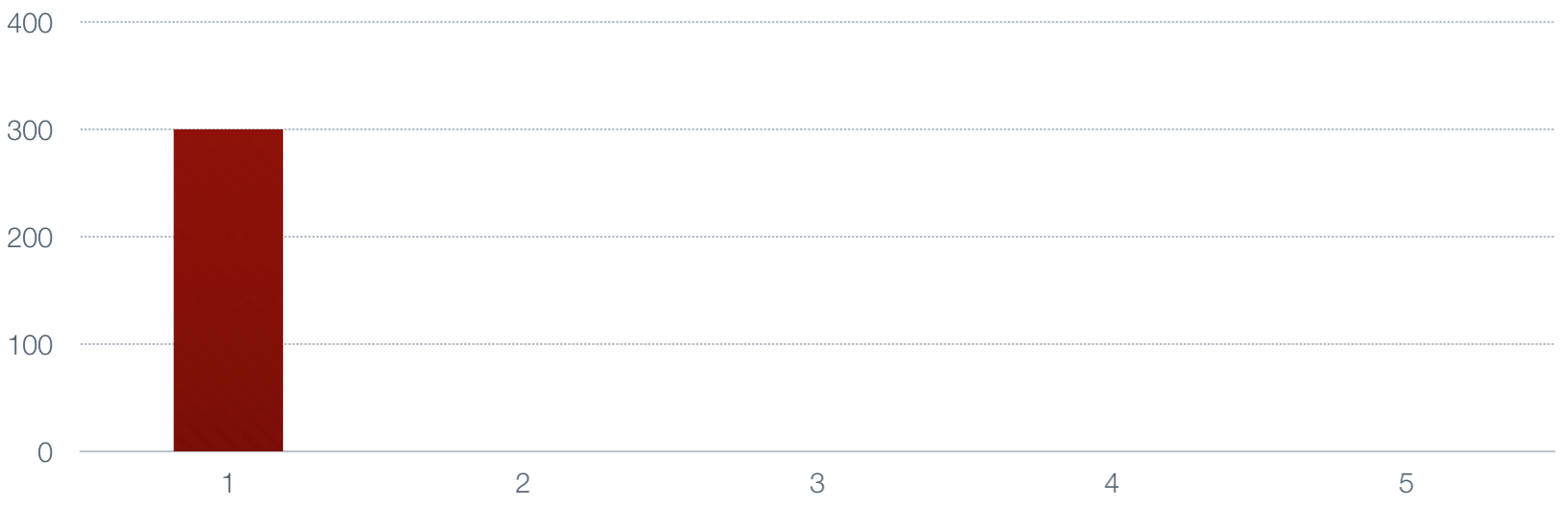

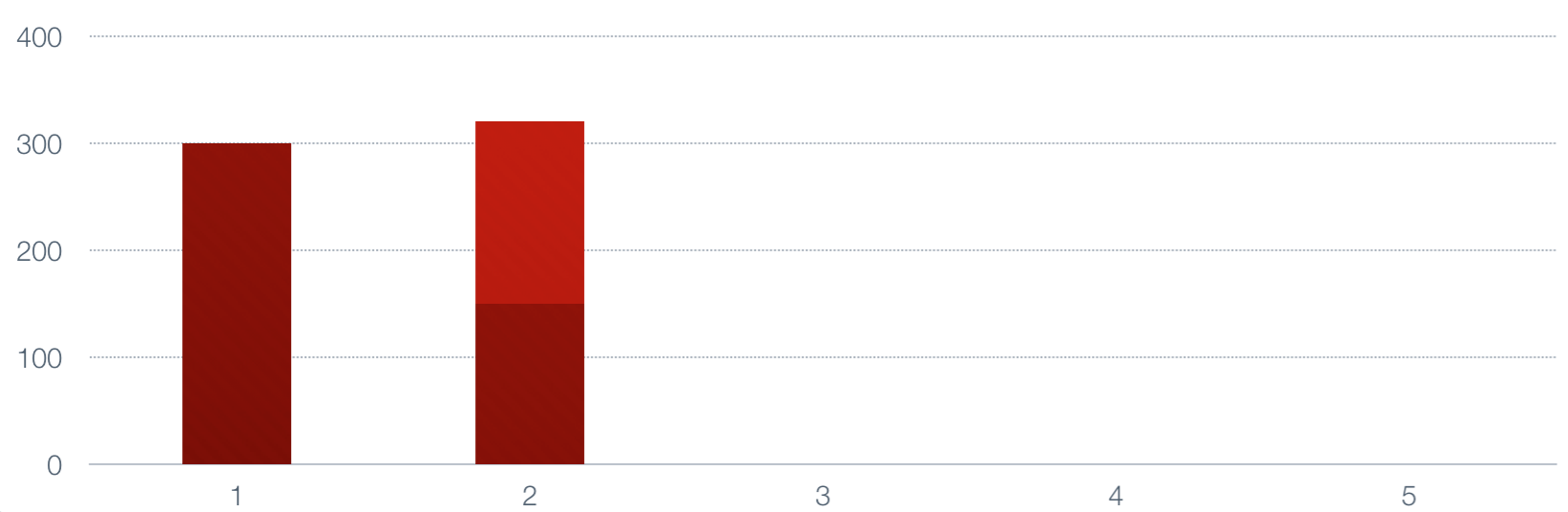

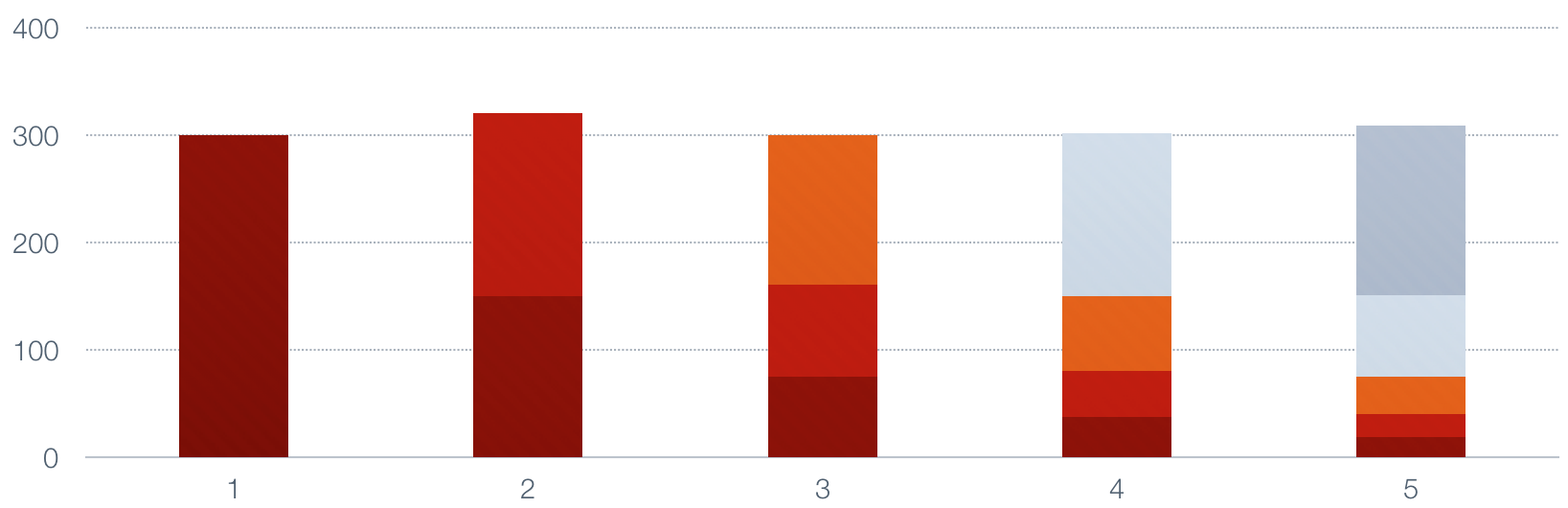

520 files changed, 42278 insertions, 15706 deletions